-

Decision-Focused Learning

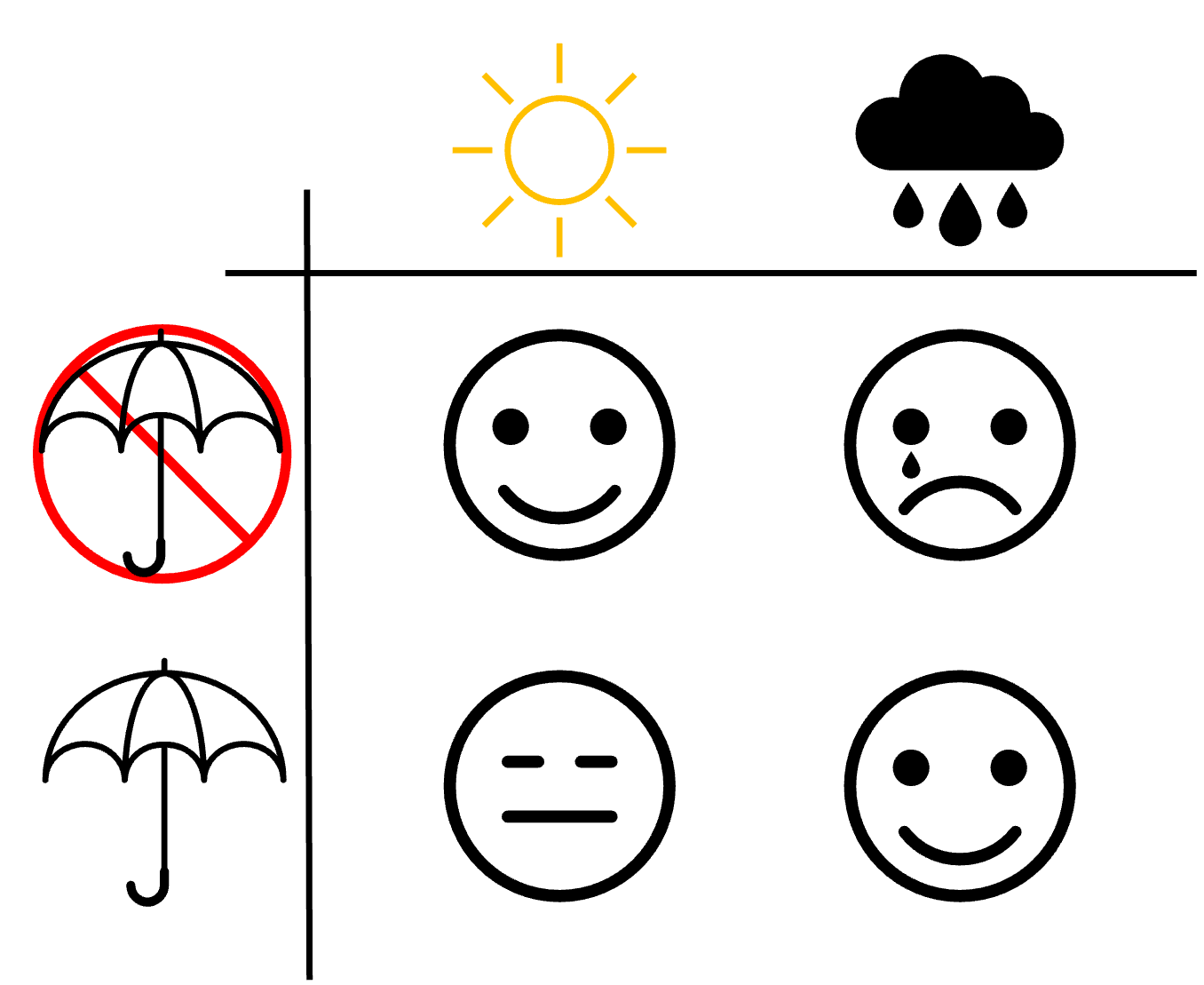

Every day we make decisions based on uncertain information. Living in the UK, a fantastic example of this is whether you should take an umbrella with you. Weather forecasts are notoriously unreliable: they can predict a clear blue sky and it ends up bucketing it down. That's why most of the time I have an umbrella in my bag, just in case. It's a regret-minimising decision. In other words, the cost of bringing my umbrella and not needing it is much lower than the cost of needing it and not having it. Here is a fun picture to outline the possible states:

If we were to naively automate this system using a typical predict-then-optimise approach there would be two steps:

- Create a rain prediction model that outputs a yes or no answer to whether it will rain.

- Create an optimisation process (in this case just a check to see if rain is predicted) to decide if I need an umbrella or not.

I'm sure you can see a number of issues and some obvious ways to improve this approach. We could do more than just predict a binary variable, \(p \in \{0, 1\}\), for whether it will rain. We could predict a probability instead, or how heavy the rain will be. Doing so would complicate our optimisation step, and perhaps we would now use a threshold value. However, this doesn't address the underlying issue: what if the prediction is wrong? The optimisation process has no knowledge that it is using predictions. More importantly, the model has learned to minimise its prediction error (\(L2\)) with no regard for the optimisation. Unlike the predict-then-optimise approach, Decision-Focused Learning is the process of teaching machine learning models to generate predictions that minimise regret when they are used to make a decision.
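The plain-Python sketch below makes the threshold idea and the mismatch between \(L2\) error and regret concrete. The costs (`CARRY_COST`, `WET_COST`), the two "models" and the four days of outcomes are all made up for illustration. The sketch only covers evaluation: it measures regret but does not train anything. Decision-focused learning methods train on regret, or on a differentiable surrogate of it, because a threshold decision has zero gradient almost everywhere.

```python
# Hypothetical costs (arbitrary units): carrying an umbrella you don't need
# is a nuisance, but getting caught in the rain without one is much worse.
CARRY_COST = 1
WET_COST = 10


def decide(p_rain):
    """Take the umbrella if leaving it has the higher expected cost.

    Taking it costs CARRY_COST. Leaving it costs p_rain * WET_COST in
    expectation, so this is the threshold rule p_rain > CARRY_COST / WET_COST.
    """
    return p_rain * WET_COST > CARRY_COST


def cost(take, rained):
    # You always pay the nuisance cost of carrying the umbrella,
    # and only get wet if you left it at home and it rained.
    if take:
        return CARRY_COST
    return WET_COST if rained else 0


def regret(take, rained):
    """Extra cost paid compared with the best decision in hindsight."""
    best = min(cost(True, rained), cost(False, rained))
    return cost(take, rained) - best


def mse(preds, outcomes):
    # The L2 prediction error a predict-then-optimise model is trained on.
    return sum((p - y) ** 2 for p, y in zip(preds, outcomes)) / len(outcomes)


def mean_regret(preds, outcomes):
    # What we actually care about: how costly the resulting decisions were.
    return sum(regret(decide(p), bool(y)) for p, y in zip(preds, outcomes)) / len(outcomes)


# Made-up example: it rains on the first of four days.
outcomes = [1, 0, 0, 0]
model_a = [0.09, 0.0, 0.0, 0.0]  # lower L2 error, but never crosses the 0.1 threshold
model_b = [0.5, 0.5, 0.5, 0.5]   # higher L2 error, but always takes the umbrella

print(f"model A: MSE={mse(model_a, outcomes):.3f}, mean regret={mean_regret(model_a, outcomes)}")
print(f"model B: MSE={mse(model_b, outcomes):.3f}, mean regret={mean_regret(model_b, outcomes)}")
# model A: MSE=0.207, mean regret=2.25
# model B: MSE=0.250, mean regret=0.75
```

Model A is the better forecaster by \(L2\), but it leaves the umbrella at home on the one rainy day. Model B has the larger prediction error, yet its decisions are cheaper.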

-

GPU Accelerated Genetic Algorithms

I have started using Genetic Algorithms (GAs) in my PhD research. They are a very effective and simple tool for solving optimisation problems. They explore the solution space with a process modelled on "natural selection", and can quickly arrive at a good candidate solution. The nice thing about GAs is that they are very easy to implement. However, a basic implementation can be a bit slow.
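For reference, here is a minimal plain-Python sketch of a basic GA. It is illustrative only and is not the implementation from this post. It uses tournament selection, single-point crossover, bit-flip mutation and elitism on the toy "OneMax" problem, where the goal is to maximise the number of 1 bits. The problem, the operators and the parameter values are all assumptions chosen for the example. Each generation handles the population one individual at a time, and that per-individual work is exactly what a vectorised version could batch.

```python
import random


def fitness(genome):
    # Toy "OneMax" objective: count the 1 bits (maximum = len(genome)).
    return sum(genome)


def tournament(pop, scores, rng, k=3):
    # Pick k random individuals and return the fittest of them.
    contenders = rng.sample(range(len(pop)), k)
    best = max(contenders, key=lambda i: scores[i])
    return pop[best]


def crossover(a, b, rng):
    # Single-point crossover: the head of one parent, the tail of the other.
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(genome, rng, rate):
    # Flip each bit independently with probability `rate`.
    return [1 - g if rng.random() < rate else g for g in genome]


def run_ga(length=20, pop_size=50, generations=100, seed=0):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        # Score the whole population one genome at a time; this per-individual
        # loop is the kind of work a vectorised (e.g. jax.vmap) version could batch.
        scores = [fitness(g) for g in pop]
        elite = pop[max(range(pop_size), key=lambda i: scores[i])]
        children = [elite]  # elitism: carry the best individual over unchanged
        while len(children) < pop_size:
            parent_a = tournament(pop, scores, rng)
            parent_b = tournament(pop, scores, rng)
            children.append(mutate(crossover(parent_a, parent_b, rng), rng, 1 / length))
        pop = children
    best = max(pop, key=fitness)
    return best, fitness(best)


best, score = run_ga()
print(score, best)
```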

In deep learning (DL) we make heavy use of GPUs (and in some cases TPUs) to speed up computation. A lot of DL work has been done with JAX. If you don't know, JAX is an autograd and XLA library for Python. It lets you write Python code against the NumPy API and have it compiled, vectorised, and run on GPUs. I was inspired by a blog post from Will Whitney outlining a cool way to use `jax.vmap` to train multiple models at once, and I asked myself: could I use the same technique to speed up my GA and run it on the GPU? It turns out, with a few minor caveats, you can! Check out the code on my GitHub.

-

String Formatting

There are a few ways to do string interpolation in Python:

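```python
value = "abc"

# These two both produce "value=abc"
"value={0}".format(value)
"value={name}".format(name=value)
# The `=` specifier (Python 3.8+) uses repr(), so this gives "value='abc'"
f"{value=}"

# You can split a string over multiple lines with implicit concatenation:
# "this is split up over multiple lines and has value='abc' in"
s = (
    "this is split up "
    "over multiple lines "
    f"and has {value=} in"
)

# Dealing with numbers
seconds = 0.00001
f"{seconds:.3}"     # '1e-05' (3 significant digits, general format)
f"{seconds:.3f}"    # '0.000'
f"{seconds:10.3e}"  # ' 1.000e-05'
```

The format spec is `<width>.<precision><type>`. `width` is the minimum length of the whole output, not the number of digits before the decimal point. `precision` is the number of digits after the decimal point for the `f` and `e` types, or the number of significant digits for `g` and when no type is given. `type` selects the presentation.

-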

Weather Forecasting

If the tag-line of this post doesn't make any sense, then you might not be up to speed with the current trend in deep learning: the transformer. I have been aware of the transformer architecture for a while now. I spend time at work exploring language models like BART and T5, and like everyone else I have seen the impressive GPT-3 demo from OpenAI. So, over Christmas I decided it was time to dig into the details and truly get up to speed.

The original use case for transformers was NLP. In the original paper the architecture was used for sequence-to-sequence machine translation. However, I'm not an NLP expert. I also know transformers are data hungry, and I have easy access to a lot of satellite imagery (about 300GB). Fundamentally, the attention / transformer architecture just deals with sequences, and there has been work on using it for images, e.g. ViT. So why not use it to try and do weather forecasting?
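ViT turns an image into a sequence by cutting it into fixed-size, non-overlapping patches and flattening each patch into a token. The sketch below shows only that patching step, in plain Python. It assumes a single-channel image stored as a list of rows, with sides divisible by the patch size. In ViT, each flattened patch is then linearly projected and given a position embedding. Those steps are left out here, and this is not necessarily how the satellite imagery in this project was prepared.

```python
def image_to_patches(image, patch_size):
    """Split a 2D grid (list of rows) into flattened, non-overlapping patches.

    Patches come out in raster order (left-to-right, top-to-bottom), which
    gives the sequence of tokens a transformer would attend over.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten the patch row by row into a single vector.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A toy 4x4 single-channel "image" becomes a sequence of 4 tokens of length 4.
image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
tokens = image_to_patches(image, 2)
print(tokens)  # [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```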

-

Serialise Objects in Python

I often have data stored in Python objects that aren't dataframes. Think config objects. Being able to save these objects to disk and load them back is often very helpful. The easiest way to save an arbitrary object is to use `pickle`, but you almost always shouldn't. It is a security nightmare: loading a pickle from an untrusted source can lead to arbitrary code execution. A safer alternative is to serialise the data to a format such as JSON:

```python
import json

config = {"learning_rate": 0.001, "epochs": 10}  # example data
s = json.dumps(config)        # dict -> JSON string
config_again = json.loads(s)  # JSON string -> dict
```

There are also `json.dump` and `json.load`, which work directly with file objects rather than strings.

I have also recently come across pyserde, a super cool library that allows the serialisation of `dataclass` objects.

-

Return of the blog

It’s a new year! So I should probably make some resolutions.

Currently all my writing is either academic or email / Teams messages. I want to write more. Essays and long-form writing have always been something I have struggled with (yes, I too question why I would do a Ph.D.). I think this is partly because of my dyslexia, since I can be a bit self-conscious about spelling issues etc. It's also hard work. But, like exercise, I'm sure it gets easier if you use the muscles regularly (at least I want to believe that). So, I think it's time to bring back the blog!

My goals should hopefully be fairly easy:

- 8 total posts (this one counts, so only 7 to go)

- 4 long reads, i.e. any post that is long enough to need sections. They can be about anything: research projects, PhD life, work.

- I would also like to add some short posts. They could just be code snippets or a quick idea / observation.

Wish me luck.

-

Hits or Spam

As part of the big website overhaul, I recently added Google Analytics to the website. The reason I hadn't done so for so long is that, as far as I was concerned, I didn't get any page views, so why measure what's not there? However, much to my surprise, I have had about 15 visitors over the last two weeks. I know it's not a lot, but the interesting thing is where they are from: there were / are readers in the USA, Germany, China and Australia.

-

Get fit

I have been at University for about 6 weeks now. In that time I have come to realise it is very easy to be unhealthy. I happen to be in catered accommodation, so I don't have to worry about food, or at least that's what I thought. It seems like nutrition isn't something uni catering cares about. The only thing I seem to be eating is carbs, all day, every day. On top of that, all of the snacks I can easily get are unhealthy (the vending machine only has sweets and soft drinks).

-

A farewell to autoCrat

As many of you know (since I have one reader, that's everyone), I have been maintaining autoCrat on behalf of New Visions for the last year or so. Sadly, I am announcing that it's time to step down. It has been a fantastic experience and I have learned a ton working with the Cloud Lab team.

-

Introducing Drekyll!

Note: I have deprecated Drekyll.

So, I'm new to Jekyll and so far I'm loving it. I already use it for two of my sites (this one and TeenTech's website). My only complaint is that I couldn't find a good Markdown editor. I'm dyslexic and my spelling is atrocious, so when I'm writing lots of text I want to use an editor with good spell check and all the other niceties. The trouble is that most text editors, such as Sublime or TextWrangler, are used primarily for coding. As such, they have no spell check or only a very limited one. My first thought was to use MS Word as a text editor, but it turns out that editing Markdown files in Word doesn't work all that well. It was when I was playing around with some autoCrat stuff that it hit me: I could use Google Docs!