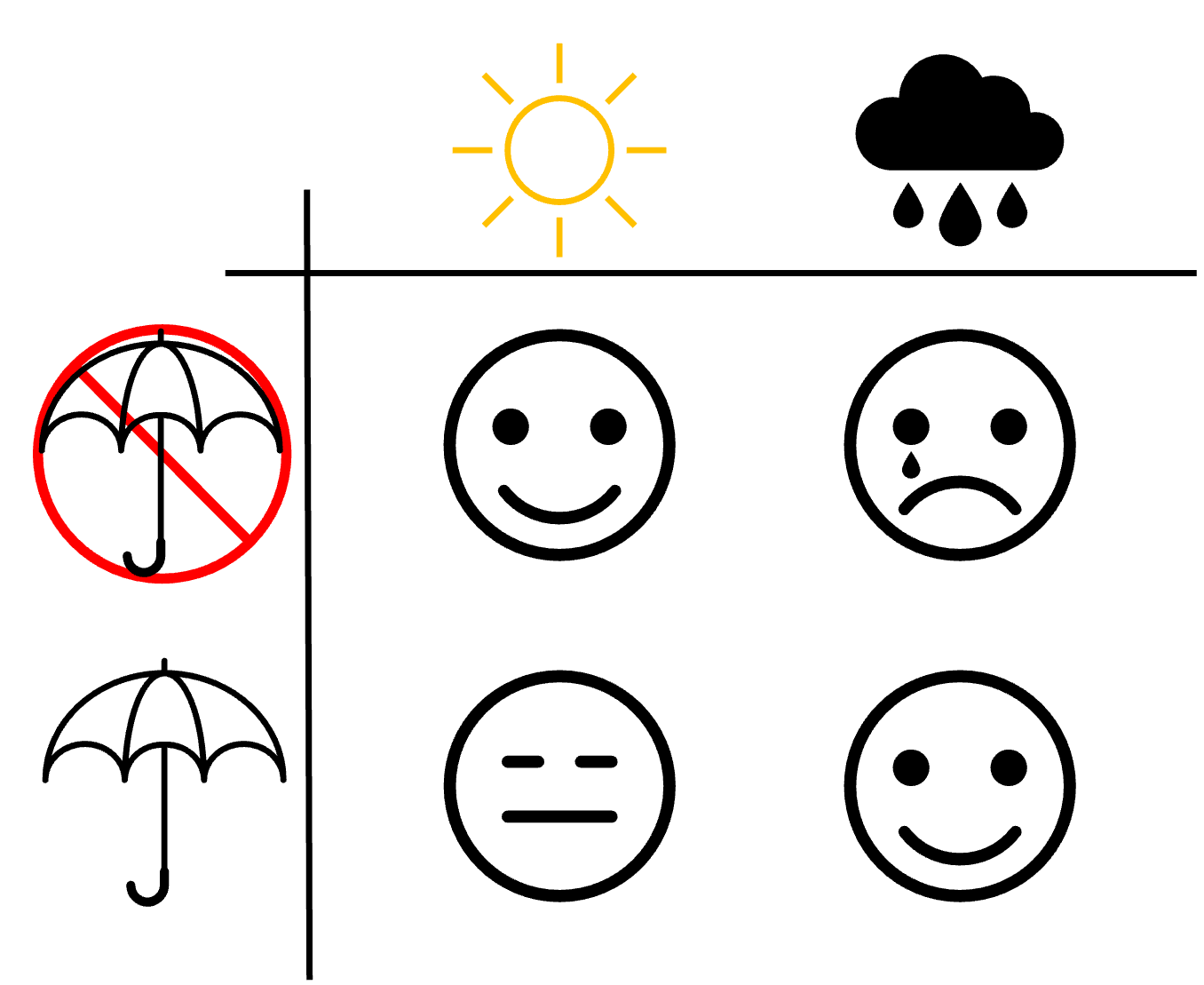

Every day we make decisions based on uncertain information. Living in the UK, a fantastic example of this is if you should take an umbrella with you. Weather forecasts are notorious. They can predict clear a blue sky and it ends up bucketing it down. That’s why most of the time I have an umbrella in my bag, just in case. Its a regret minimising decision, in other words, the cost of bring my umbrella with me and not needing it is much lower than needing it and not having it. Here is a fun picture to outline the possible states:

If we were to naively automate this system using a typical predict-then-optimise approach there would be two steps:

- Create a rain prediction model that outputs a yes or no to if it will rain.

- Create an optimisation process (in this case just a check to see if rain is predicted) to decide if I need an umbrella or not.

Im sure you can see a number of issues and some obvious ways to improve this approach. We could do more that just predict a binary variable, \(p \in {0, 1}\), for if it will rain, we could predict a probability or how heavy the rain will be. Doing so would complicate our optimisation step, perhaps now we use a threshold value. However, this doesn’t address the underlying issue of, what if the prediction is wrong? The optimisation process as no knowledge its using predictions, and more importantly, the model has learned to minimise its prediction error (\(L2\)) with no regard for the optimisation. Unlike the predict-then-optimise approach, Decision-Focused Learning is the process of teaching machine learning models to generate predictions that when used to make a decision minimise regret.

The maths

Machine learning

The typical machine learning (ML) process is defined as follows. Given a set of pairs of features and labels: \(\mathcal{D} = \{(x_1,y_1), (x_2,y_2) \ldots (x_n, y_n)\}\) where \(x_i\) is an $nd$ feature vector \(x = [f_1, f_2 \dots f_n]\) sampled from \(X\) and its values have some correlation to \(y_i\)

Assuming that it exists, we want to learn the true function \(\mathcal{F}(x_i) = y_i\). However, this isn’t possible, so we use the data, $D$, to learn a model \(M\) where \(M(x_i) \rightarrow \hat{y_i}\) that minimises a loss function $\mathcal{l}$ between $y$ and $\hat{y}$. A typical loss function might look something like $l = (\hat{y_i} - y_i)^2$. For a parametrised model the learning process looks like

\[\theta^* = \operatorname*{argmin}_\theta \frac{1}{n} \sum_{i=0}^n l(y_i, M_\theta(x_i)) \label{eq:ch0:ml-learning}\]where \(\theta^*\) would be the optimal parameters for the model given the data available.

Optimisation

In a classical optimisation process, we attempt to find the optimal solution \(z*\) to a problem. \(z*\) is typically defined as being drawn from the set of all valid solutions \(z* \in Z\). Though in some notation \(Z\) can be the space of all possible solutions, valid or not and constraints are applied to the optimization process. We will use \(Z\) for the valid solution space and \(\mathcal{Z}\) for the larger space of all possible solutions. In math notation, \(Z \in \mathcal{Z} \quad s.t \; g_i(z)\leq 0, \textrm{for} \: i \in \{1, \ldots, m\} \;\forall z \in Z\) where \(g\) is a constraint on the solution.

The optimality of a solution is measured using a fitness function \(f\). We can define the optimisation process as

\[z^*= \operatorname*{argmin}_{z \in Z} f(z) \label{eq:ch0:opt}\]Of note here is that \(f\) can often be computationally expressive or \(Z\) so large it is infeasible to try all possible solutions. As such, methods must be used to reduce the search space to find the solution. This is typically a combination of heuristics (often defined using domain expertise) and advanced search methods such as genetic algorithms. In fact, the machine learning problem defined in [\ref{eq:ch0:ml-learning}] is an optimisation problem.

In the case of the ML problem with a parametrised model such as a neural network. It is possible to calculate gradients and use them to direct the exploration of the solution space in order to find a solution \(\hat{z^*}\) that is close to the true optimal solution i.e. \(\hat{z^*} \approx z^*\)

Predict-then-Optimize

In the predict-them-optimize setting, the optimization process depends on some unknown values. To find the true optimal solution, the fitness function must be conditioned on the unknown values \(y\). As such [\ref{eq:ch0:opt}] must be reformulated to \(z^*(y) = \operatorname*{argmin}_{z \in Z} f(z, y)\). In a practical sense, this is infeasible as \(y\) is unknown. To circumvent this issue the model \(M_\theta\) can be used as \(x\) is available. Using \(M_\theta\) we can generate a value \(\hat{y}\) to be used in the optimisation.

\[\begin{aligned} \label{eq:ch0:pred-then-opt} \hat{z^*}(M_\theta(x)) = \hat{z^*}(\hat{y}) = \operatorname*{argmin}_{z \in Z} f(z, \hat{y}) \end{aligned}\]We use the notation \(\hat{z^*}\) here to denote it is the optimal solution found using predictions of \(y\). If the model perfectly reconstructs the true values of \(y\) then the solution found should be the true optimal solution i.e. \(\hat{z^*} = z^*\).

Note that \(dim(y) = D\) is typically high as all values needed to parametrise the optimisation problem are predicted. As such it is likely comprised of the output multiple models \(\hat{y} = [\hat{y_1}, \ldots, \hat{y_D}] = [M_\theta(x_1), \ldots, M_\theta(x_D)]\) where each model predicts an individual component e.g. travel time, supply, demand, and cost.

- Pros

This is simple to implement. Predictive models are fairly easy to learn. Can use any optimization method.

- Cons

No information about the optimization process is passed into the model.

It has been shown that model misspecification can lead to extreme errors when multiple correlated variables are combined in the optimization process [@Cameron2022]

Decision-Focused Learning

In the PTO setting, we noted that when \(\hat{y} = y\), should result in a solution where \(\hat{z^*} = z^*\). However, models learned using [\ref{eq:ch0:ml-learning}] will almost always have some error. The error induced by sub-optimal solution \(\hat{z^*}\) selected by the fact that \(\hat{y} \neq y\) is called the decision loss (DL). It is the value of the fitness function of the solution \(\hat{z^*}\) generated using predicted values, evaluated using the ground truth values of \(y\).

\[DL(\hat{y}, y) = f(\hat{z^*}(\hat{y}), y) \label{eq:ch0:dl}\]In a [DFL]{acronym-label=”DFL” acronym-form=”singular+short”} setting our goal is now reformulated. We aim to learn a model \(M_\theta\) that minimises the [DL]{acronym-label=”DL” acronym-form=”singular+short”}. For the dataset \(\mathcal{D}\), the learning process would be:

\[\theta^* = \operatorname*{argmin}_{\theta}\ \frac{1}{n} \sum_{i=1}^{n}\ DL(M_\theta(x_i), y_i)\]-

Pros Better performance.

-

Cons

Hard to implement; Can be more computationally expensive to learn; Still have an (expensive) optimization step